Maxim Chernyshev and his team received the Best Paper Award at EAI ICDF2C 2025 for their innovative use of large language models (LLMs) in digital forensics. Their research demonstrates how AI can assist investigations while addressing the challenges of compromised systems. In this article, he shares key insights, findings, and practical implications from the award-winning study.

What happens when the systems we build to boost our own productivity become targets themselves? That question drove our team, including Zubair Baig, Naeem Syed, Robin Doss, Malcolm Shore, and myself, to explore how large language models (LLMs) could enhance and streamline digital investigations of compromised LLM systems. Our work earned the Best Paper Award at EAI ICDF2C 2025, but more importantly, it opened conversations about the future of AI-assisted digital forensics.

The Double-Edged Sword of LLM Integration

LLMs are everywhere, powering chatbots, advanced business automation workflows, and intelligent agents. According to Deloitte, 42% of organisations report productivity gains from adopting LLMs, yet these systems introduce novel vulnerabilities that are difficult to investigate with traditional tools. Prompt injection attacks, in which malicious instructions manipulate an LLM's behaviour, are a particularly insidious security threat. Unlike traditional attacks, these injections leave evidence scattered across distributed logs, which makes investigations highly complex. But the same LLM technology that creates these challenges could help solve them.

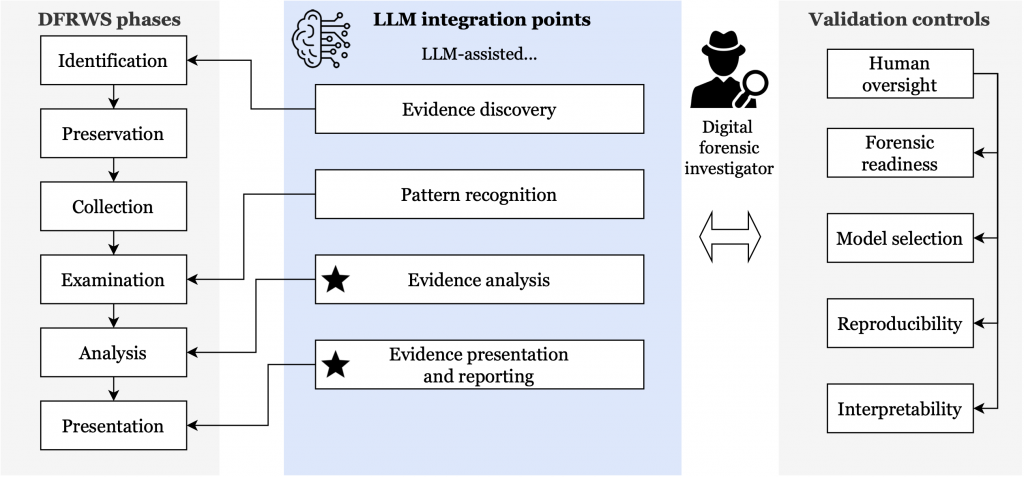

We developed a framework that extends the established foundational digital forensic process model with four strategic LLM integration points: evidence discovery, pattern recognition, evidence analysis, and evidence presentation. To maintain forensic integrity, we introduced five validation controls: human oversight, forensic readiness, model selection, reproducibility, and interpretability. The framework seeks to address a fundamental challenge: using non-deterministic AI systems in contexts that require scientific rigor, such as digital forensics.
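
To make the validation controls more concrete, here is a minimal Python sketch of how an LLM-produced finding might carry its own audit trail. The schema, field names, and the `unmet_controls` helper are hypothetical illustrations, not the framework's actual implementation. Forensic readiness, which concerns having evidence collection in place before an incident occurs, is an organisational property and is not modelled here.

```python
import hashlib
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical identifiers for the framework's four LLM integration points.
INTEGRATION_POINTS = {
    "evidence_discovery",
    "pattern_recognition",
    "evidence_analysis",
    "evidence_presentation",
}


@dataclass(frozen=True)
class LLMFinding:
    """An LLM-produced finding plus the provenance needed to audit it (hypothetical schema)."""

    integration_point: str
    model_id: str                      # exact model name and version (model selection)
    temperature: float                 # decoding settings kept for reproducibility
    seed: Optional[int]
    prompt: str
    output: str
    rationale: str                     # model's stated reasoning (interpretability)
    reviewed_by: Optional[str] = None  # investigator who signed off (human oversight)

    @property
    def prompt_sha256(self) -> str:
        # Hash of the exact prompt, so a later re-run can show it used identical input.
        return hashlib.sha256(self.prompt.encode("utf-8")).hexdigest()


def unmet_controls(f: LLMFinding) -> List[str]:
    """Return the illustrative checks this finding does not yet satisfy."""
    problems = []
    if f.integration_point not in INTEGRATION_POINTS:
        problems.append("unknown integration point")
    if not f.model_id:
        problems.append("model selection: model version not recorded")
    # Temperature 0 reduces, but does not guarantee removal of, run-to-run variation.
    if f.seed is None and f.temperature > 0:
        problems.append("reproducibility: sampling without a fixed seed")
    if not f.rationale.strip():
        problems.append("interpretability: no rationale captured")
    if f.reviewed_by is None:
        problems.append("human oversight: not yet reviewed")
    return problems


finding = LLMFinding(
    integration_point="evidence_analysis",
    model_id="example-model-v1",  # placeholder, not a real model identifier
    temperature=0.0,
    seed=None,
    prompt="Classify agent task T-17 as compromised or benign.",
    output="compromised",
    rationale="The task sent data to an unlisted external address.",
)
print(unmet_controls(finding))  # ['human oversight: not yet reviewed']
```

The record deliberately treats an unreviewed finding as incomplete, so no LLM output can quietly bypass the investigator.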

Our Approach: Teaching LLMs to Investigate Themselves

We evaluated 12 state-of-the-art LLMs across 1,046 simulated attack scenarios, testing whether they could reliably classify compromised agent tasks and reconstruct forensic timelines in a structured way. Our framework introduced confidence-threshold-based analysis, which has the system express its uncertainty and lets investigators balance catching every potential incident against minimising false alarms.
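
The threshold trade-off can be illustrated with a short sketch. It assumes each classification comes with a self-reported confidence score and a known ground-truth label; the `precision_recall` function and the toy scores are invented for illustration and are not data from the study.

```python
from typing import List, Tuple

# (self-reported confidence that a task is compromised, ground-truth label)
# Toy values invented for illustration; not results from the study.
toy = [(0.95, True), (0.90, True), (0.80, True), (0.70, False),
       (0.60, True), (0.55, False), (0.40, False), (0.30, True)]


def precision_recall(preds: List[Tuple[float, bool]], threshold: float) -> Tuple[float, float]:
    """Flag a task as compromised only when its confidence is >= threshold."""
    tp = sum(1 for conf, attack in preds if conf >= threshold and attack)
    fp = sum(1 for conf, attack in preds if conf >= threshold and not attack)
    fn = sum(1 for conf, attack in preds if conf < threshold and attack)
    # Convention chosen here: an empty denominator counts as perfect (1.0).
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 1.0
    return precision, recall


for t in (0.50, 0.75, 0.90):
    p, r = precision_recall(toy, t)
    print(f"threshold={t:.2f}  precision={p:.2f}  recall={r:.2f}")
```

On this toy data, raising the threshold from 0.50 to 0.90 lifts precision from 0.67 to 1.00, while recall falls from 0.80 to 0.40: fewer false alarms, but more missed attacks.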

We found that models such as Claude 3.7 Sonnet achieved 86% accuracy in capturing critical forensic entities, while GPT-4o was remarkably consistent in generating executable code for timeline reconstruction. However, we also observed significant trade-offs: higher confidence thresholds improved detection precision but substantially degraded recall, meaning that some attacks could be missed. This finding highlights why human oversight remains essential. While LLMs can accelerate analysis, investigators must still understand and manage these performance characteristics.
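
The code the models generated is not reproduced here, but the core task of timeline reconstruction can be sketched as merging timestamped entries from separate logs into one ordered sequence. The log sources, line format, and function names below are hypothetical.

```python
from datetime import datetime
from typing import Dict, List

# Hypothetical log excerpts from three separate sources; the format is invented.
SOURCES: Dict[str, List[str]] = {
    "gateway": [
        "2025-03-01T10:02:11Z user=alice POST /agent/run task=T-17",
    ],
    "agent": [
        "2025-03-01T10:02:13Z task=T-17 fetched https://example.com/page",
        "2025-03-01T10:02:15Z task=T-17 tool=send_email to=attacker@example.net",
    ],
    "llm": [
        "2025-03-01T10:02:14Z task=T-17 completion contains 'ignore previous instructions'",
    ],
}


def parse_line(source: str, line: str) -> dict:
    """Split a line into an ISO 8601 UTC timestamp and the remaining message."""
    stamp, message = line.split(" ", 1)
    # Replace the trailing 'Z' so fromisoformat also works on Python < 3.11.
    ts = datetime.fromisoformat(stamp.replace("Z", "+00:00"))
    return {"time": ts, "source": source, "message": message}


def build_timeline(sources: Dict[str, List[str]]) -> List[dict]:
    """Merge events from every source into one chronologically ordered list."""
    events = [parse_line(src, line) for src, lines in sources.items() for line in lines]
    return sorted(events, key=lambda e: e["time"])


for event in build_timeline(SOURCES):
    print(event["time"].isoformat(), f"[{event['source']}]", event["message"])
```

Interleaving the sources places the injected instruction in the LLM log between the agent fetching external content and the agent sending email, a sequence no single log shows on its own. Real deployments would also need per-source parsers and clock-skew handling; this sketch assumes every source already logs UTC timestamps in ISO 8601 format.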

Why This Matters

Digital forensics faces mounting pressure from exponential data growth, increasingly sophisticated attacks, and massive case backlogs. LLM-assisted analysis isn’t about replacing human investigators; it’s about giving them additional capabilities to process complex unstructured data, identify patterns, and automatically generate reliable, structured representations of evidence. Our framework bridges emerging AI capabilities and rigorous forensic principles, proposing specific integration points while acknowledging the critical limitations of non-determinism and hallucination inherent to LLMs.
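
One way to make structured evidence representations more reliable is to check an LLM's output before accepting it. The sketch below assumes a hypothetical JSON schema (`task_id`, `verdict`, `evidence_ids`) and flags any cited log entry that does not exist in the preserved evidence, a simple guard against hallucinated references. It illustrates the idea rather than the framework's own checks.

```python
import json
from typing import List, Set

# Hypothetical schema for one LLM-generated finding.
REQUIRED_FIELDS = {"task_id", "verdict", "evidence_ids"}
ALLOWED_VERDICTS = {"compromised", "benign"}


def validate_finding(raw: str, known_log_ids: Set[str]) -> List[str]:
    """Return problems with an LLM's JSON finding; an empty list means it passed."""
    try:
        finding = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(finding, dict):
        return ["top-level value must be a JSON object"]

    problems = [f"missing field: {name}" for name in sorted(REQUIRED_FIELDS - set(finding))]
    if "verdict" in finding:
        verdict = finding["verdict"]
        if not isinstance(verdict, str) or verdict not in ALLOWED_VERDICTS:
            problems.append(f"unexpected verdict: {verdict!r}")

    evidence_ids = finding.get("evidence_ids", [])
    if not isinstance(evidence_ids, list):
        problems.append("evidence_ids must be a list")
        evidence_ids = []
    # Every cited log entry must exist in the preserved evidence; anything else
    # may be a hallucinated reference and needs investigator review.
    for log_id in evidence_ids:
        if not isinstance(log_id, str) or log_id not in known_log_ids:
            problems.append(f"cites unknown log entry: {log_id}")
    return problems


known = {"gw-0001", "agent-0042"}
reply = '{"task_id": "T-17", "verdict": "compromised", "evidence_ids": ["agent-0042", "agent-9999"]}'
print(validate_finding(reply, known))  # ['cites unknown log entry: agent-9999']
```

A check like this cannot prove a finding is correct, but it turns a silent hallucination into an explicit item for the investigator to resolve.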

Why EAI ICDF2C 2025?

The conference struck a good balance between cutting-edge research and practical application. Whether you’re investigating AI systems, developing new digital forensic tools, or exploring cybercrime trends, ICDF2C offers a diverse community in which to present and exchange interdisciplinary ideas. It was an opportunity to gain new perspectives, meet potential collaborators, and explore a few research questions I hadn’t considered before. I would recommend future editions of ICDF2C to anyone seeking to explore topics at the intersection of digital forensics and emerging technologies.

Maxim Chernyshev

Maxim Chernyshev is a PhD candidate at Deakin Cyber Research and Innovation Centre, Deakin University, specialising in AI-assisted digital forensics. His research develops frameworks for investigating security incidents in LLM-integrated applications and agentic systems, addressing the dual challenge of using large language models as both subjects of investigation and tools for forensic analysis. His work focuses on maintaining scientific rigor and evidential integrity when deploying AI capabilities in operational workflows.